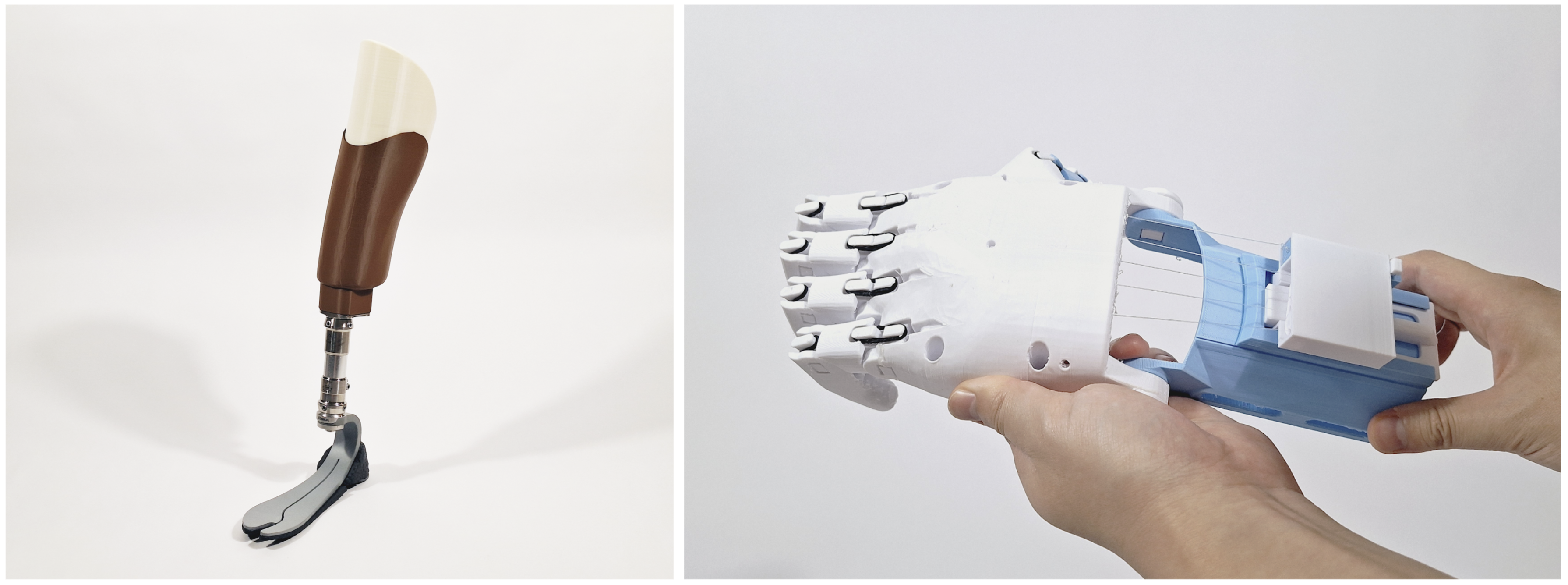

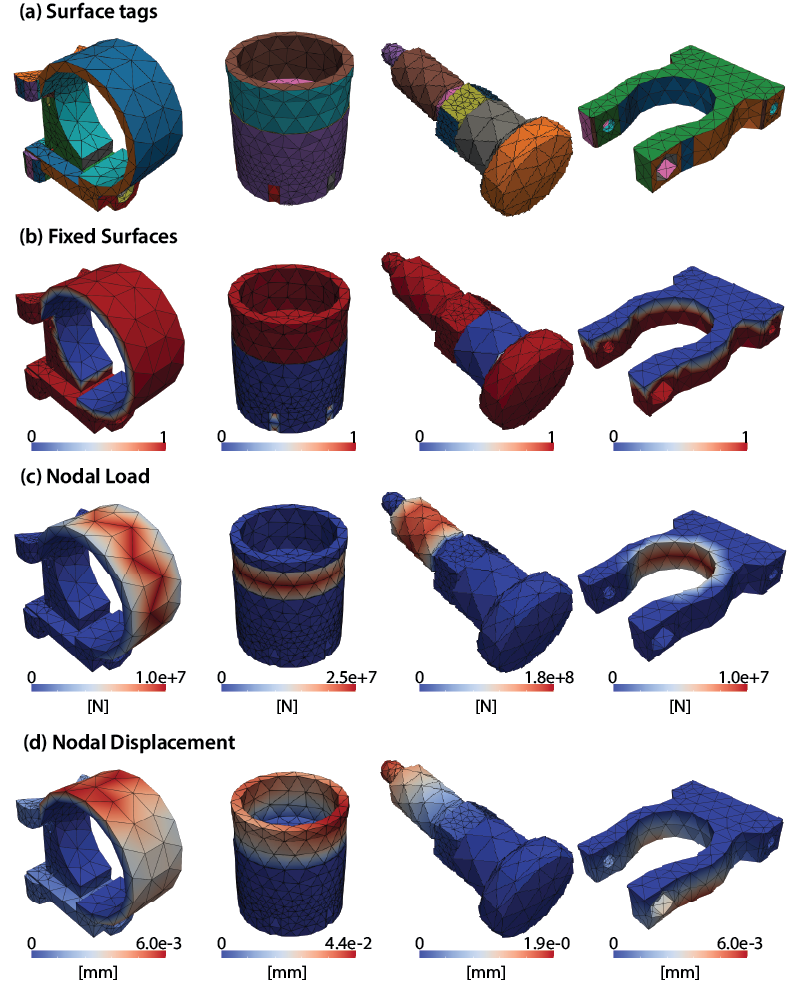

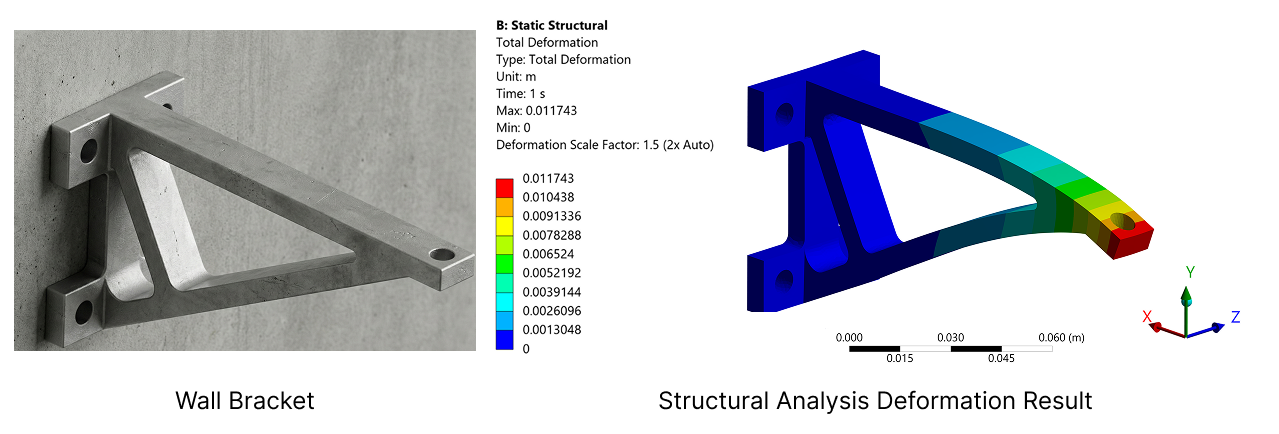

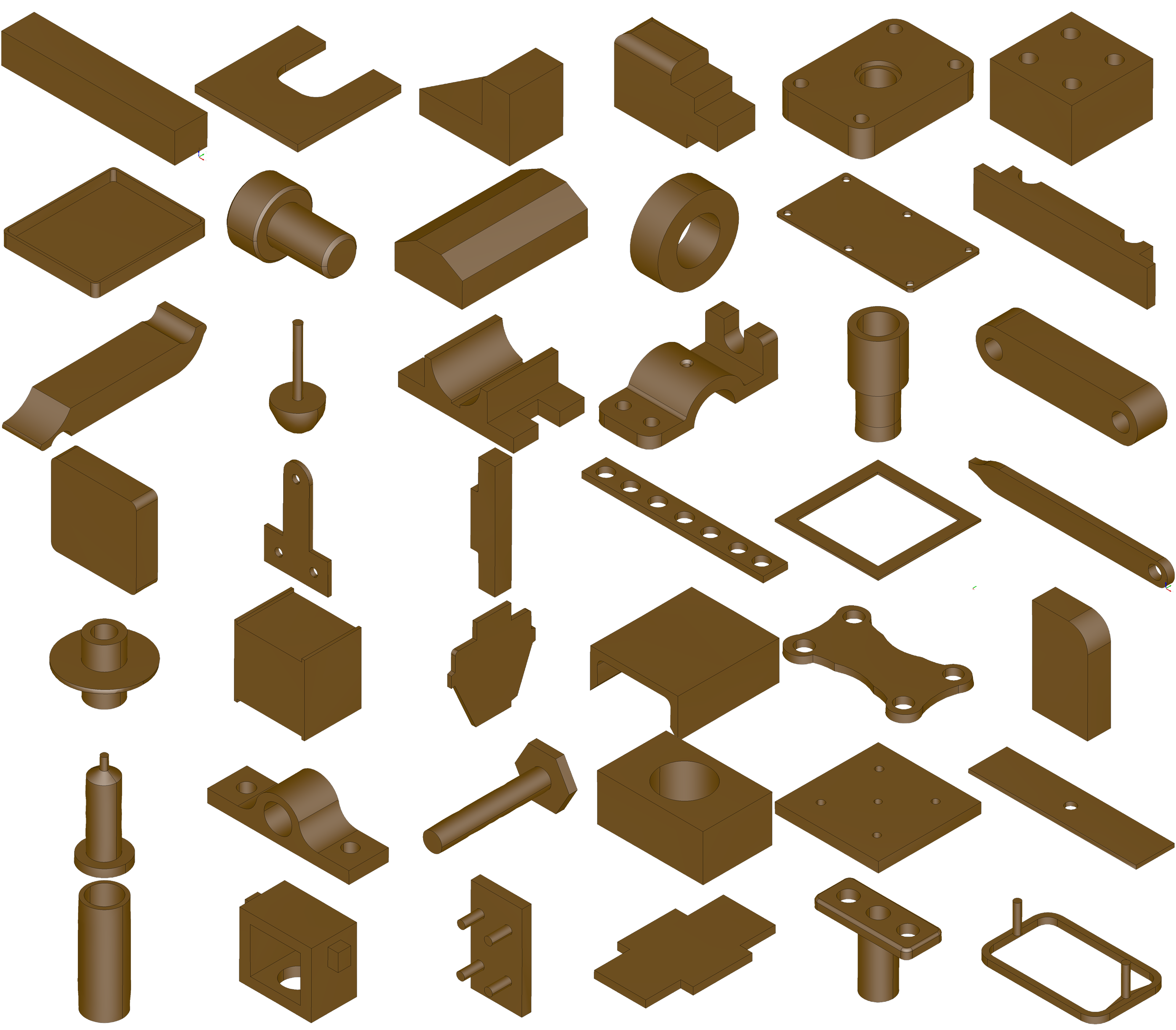

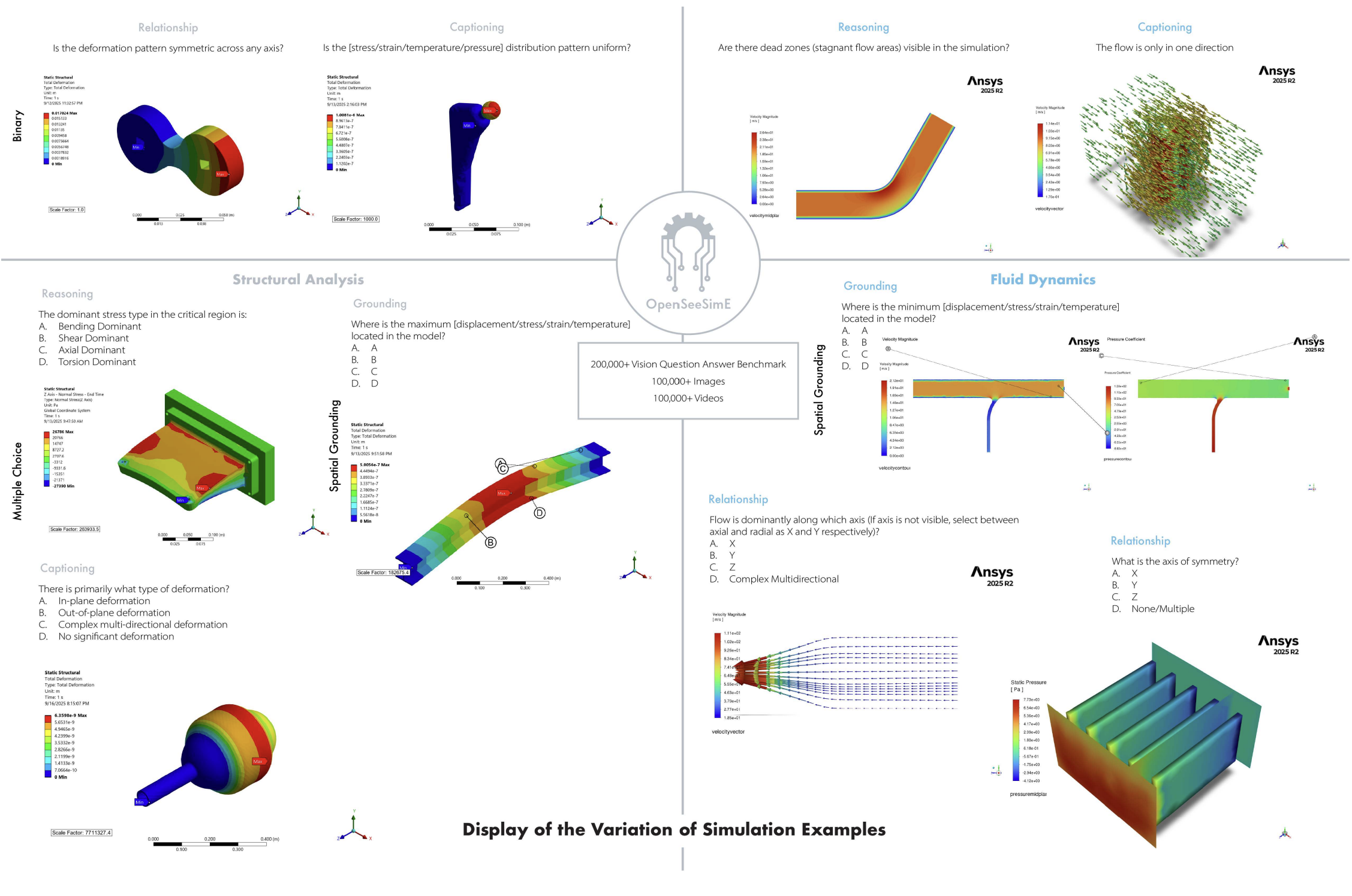

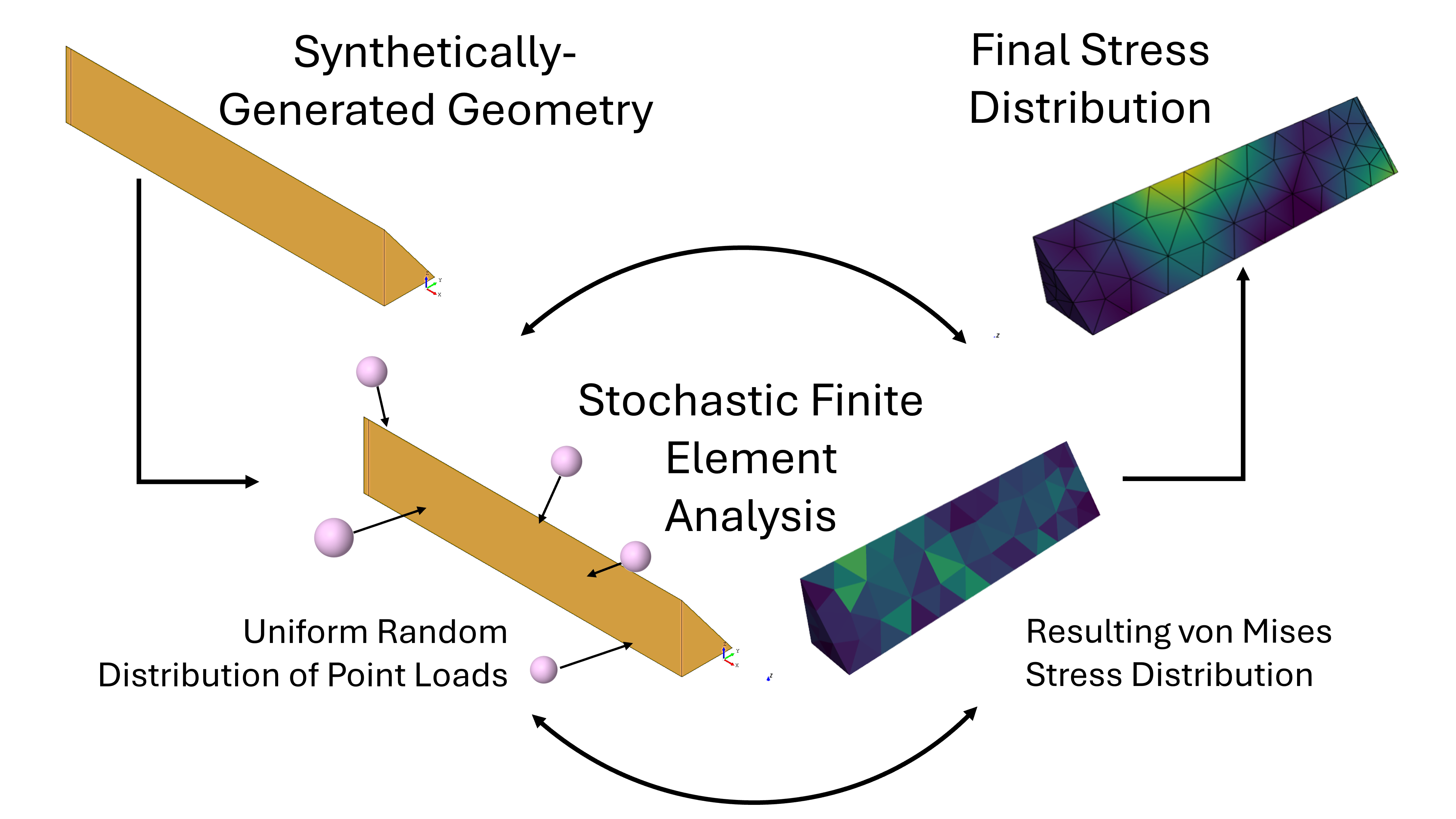

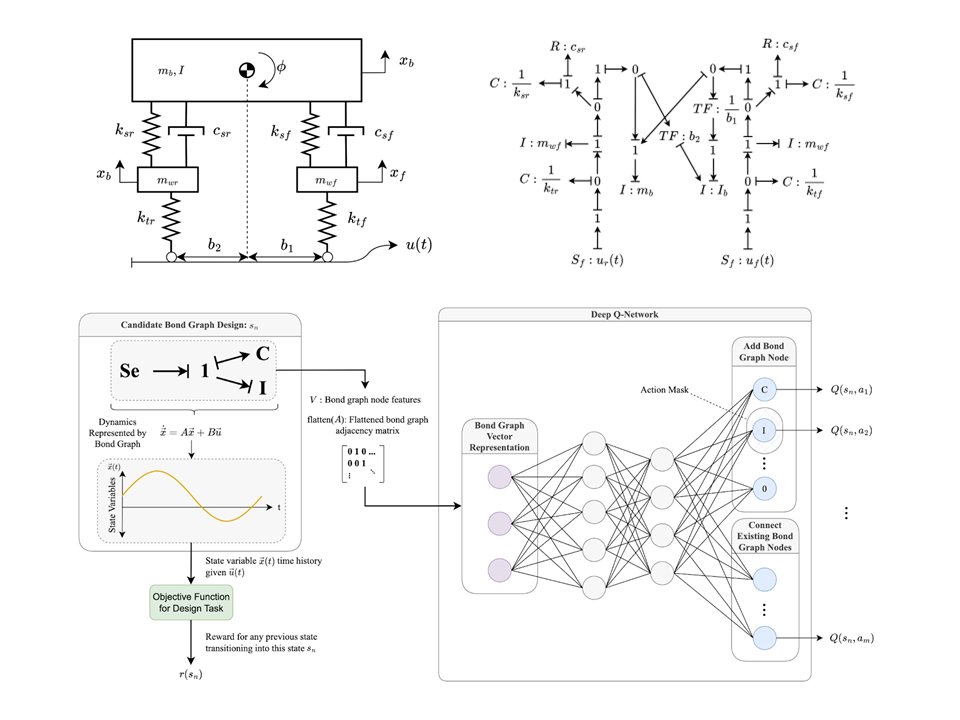

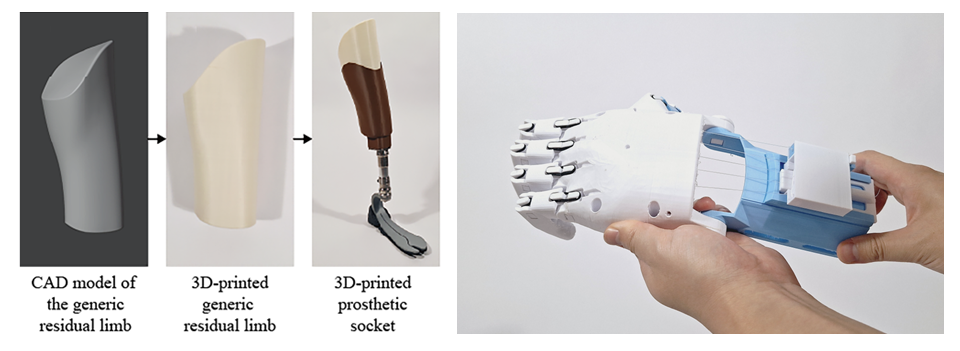

Our research integrates artificial intelligence with engineering design to create better products for more people, with fewer resources. We work on fundamental challenges in trustworthy AI for design simulations, accessible manufacturing, and adaptive intelligent systems.

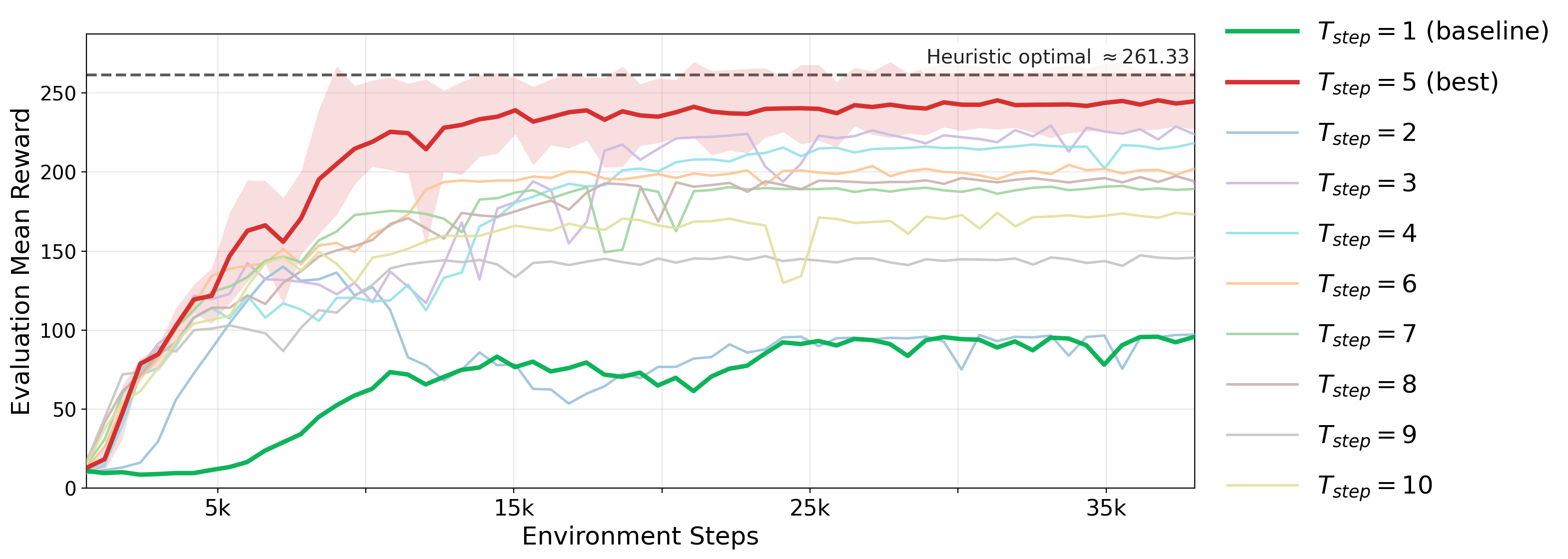

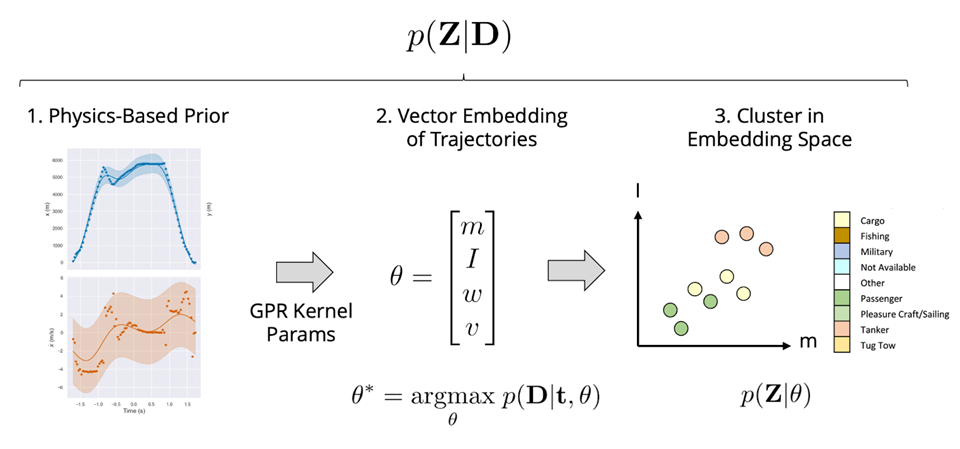

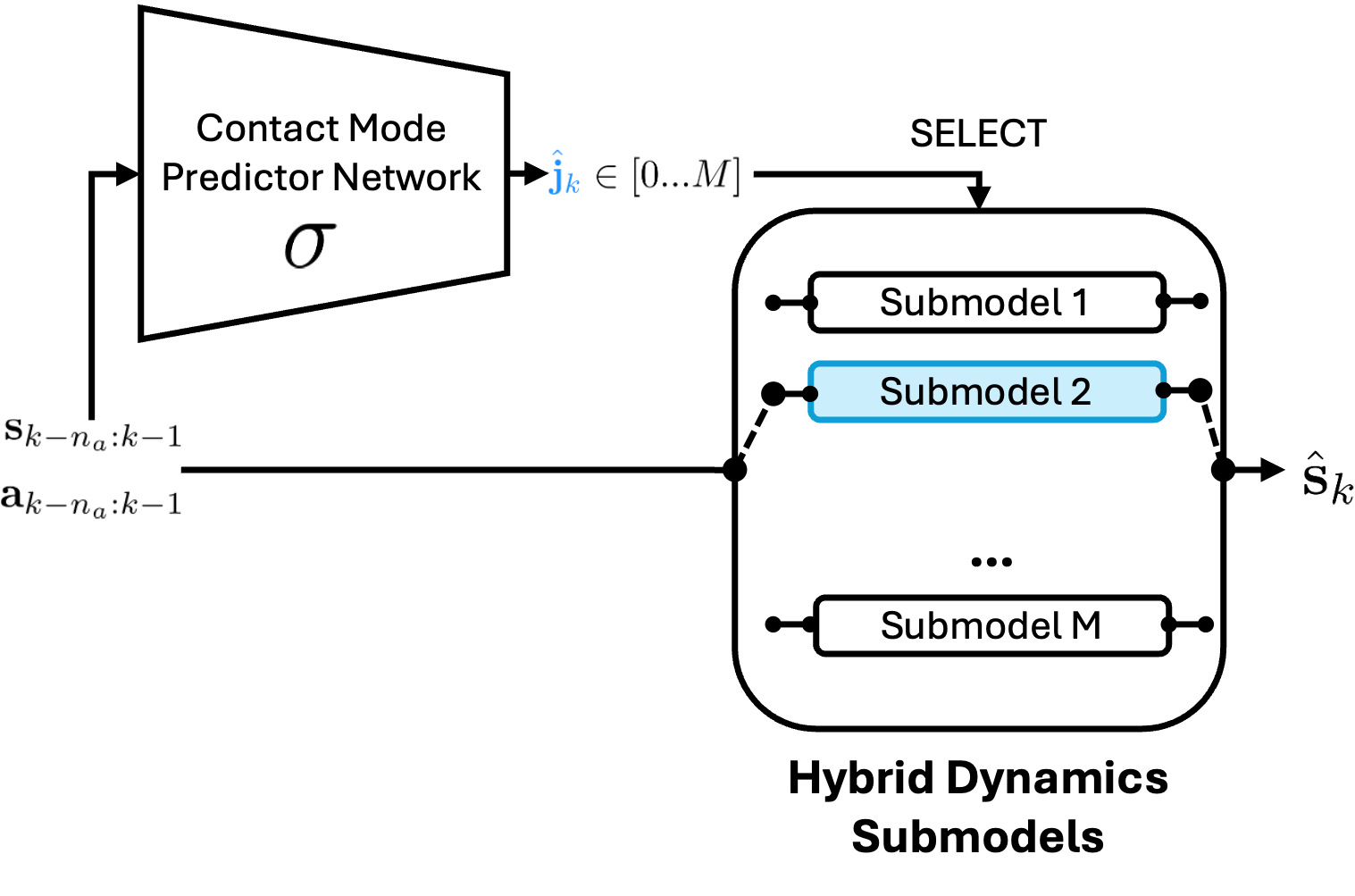

Our work spans multiple disciplines, including mechanical engineering, machine learning, robotics, and biomedical engineering, and is grounded in both theoretical rigor and practical validation. Current projects range from teaching AI to interpret engineering simulations, to generating and evaluating designs, to training robots that learn physics-based models of their own dynamics.